Editor’s Note: This story has been updated to reflect corrections made regarding attributions to the Deepfakes Analysis Unit (DAU) and its partners. An earlier version of the story indicated that the conclusions were made by the tools they used for analysis. The conclusions were drawn from the tools used, and these interpretations were made by the DAU. The story was also updated to correct the description of the DAU, as well as to reflect that it was the DAU that got in touch with their partner, GetReal Labs.

On the morning of President Ferdinand Marcos Jr.’s third State of the Nation Address last July, a low-quality video supposedly showing him snorting a white powdery substance made the rounds on social media.

Dubbed the polvoron video by its publishers, with the crumbly Filipino dessert used as a euphemism for cocaine, the clip immediately drew questions over its legitimacy. Was it actually Marcos in the video? Or had the video been manipulated using artificial intelligence (AI)?

In 2021, Marcos underwent a drug test shortly after then-president Rodrigo Duterte said a candidate for president was using cocaine. Marcos tested negative for the drug, but his critics continued to circulate rumors of his alleged substance abuse.

At the time of its release, the people behind the polvoron video gave no information about its origins, provided no details of the “authentication process” it allegedly underwent, and made no raw copy available for the public to scrutinize.

In a joint press conference on July 23, the National Bureau of Investigation and the Philippine National Police stated that results from their video spectral analysis revealed that the tragal notch and the antitragus (both on the outer part of the ear) of the man in the video differ from those of the president.

Without sufficient proof of its factuality, talk about the polvoron video quickly crumbled.

In an age when videos can be easily manipulated and generated with just a prompt and a click, transparency is key to establishing legitimacy.

Given that more information has come to light about the video from its publishers, VERA Files Fact Check continued to take steps to independently verify the video. Here are our findings:

Publishers say video is ‘authenticated’ but shows questionable ‘proof’

VERA Files Fact Check reached out to key figures present at the Duterte-linked Hakbang ng Maisug rally in Los Angeles, California, where the video debuted, to get more information on its authenticity.

A day before the July 22 LA rally, Harry Roque told a crowd of Maisug supporters in Canada that the forthcoming polvoron video was “not AI” and that it “underwent authentication.”

“Maharlika authenticated it in the U.S. and also by using deepware,” Roque told VERA Files in a Viber message on Aug. 1, referring to Claire Contreras (whose social media name is Maharlika), once an avid pro-Marcos content creator who has now turned against the family.

He did not respond when asked to share certification or an authentication report but directed VERA Files Fact Check to Maharlika’s social media channels.

It was only three weeks after she premiered the video in LA that Maharlika talked at length about her verification process, in a vlog posted on Aug. 12. She did not state which program she used or which forensics experts she consulted, even though in the same vlog she questioned the credentials of the government’s digital forensics experts.

In the vlog and in a succeeding interview with Sonshine Media Network International (SMNI), Maharlika said she sought only to prove that the video was “untampered.” She read a printed report from an unknown source that stated the video had not been tampered with, based on five tests.

She could not provide an answer on how she proved that the man in the video was Marcos.

VERA Files Fact Check has reached out to Maharlika on her Facebook pages for comment. One of the messages had been “seen” but got no response.

AI experts find traces of facial manipulation in the video

To independently assess the video, VERA Files Fact Check sought the help of experts in detecting AI-generated and AI-manipulated media. We reached out to the Deepfakes Analysis Unit (DAU), which verifies harmful or misleading audio and video content produced using AI and is part of the India-based Misinformation Combat Alliance.

We sent the DAU two copies of the polvoron video: one uploaded by a publisher on July 22, and a better-quality copy that Maharlika herself uploaded on Facebook weeks later, on Aug. 25.

The analysis of the former yielded mostly inconclusive results because of the video’s poor quality. We learned that video quality is important in detecting AI manipulation in media.

“Poor quality videos impact the results from the tools as the tools may not be able to accurately pick [up] on AI manipulations in a low quality video,” the DAU told VERA Files Fact Check.

Results of the analyses on Maharlika’s higher-quality video were more conclusive. They indicated possible tampering with the face of the man in the video.

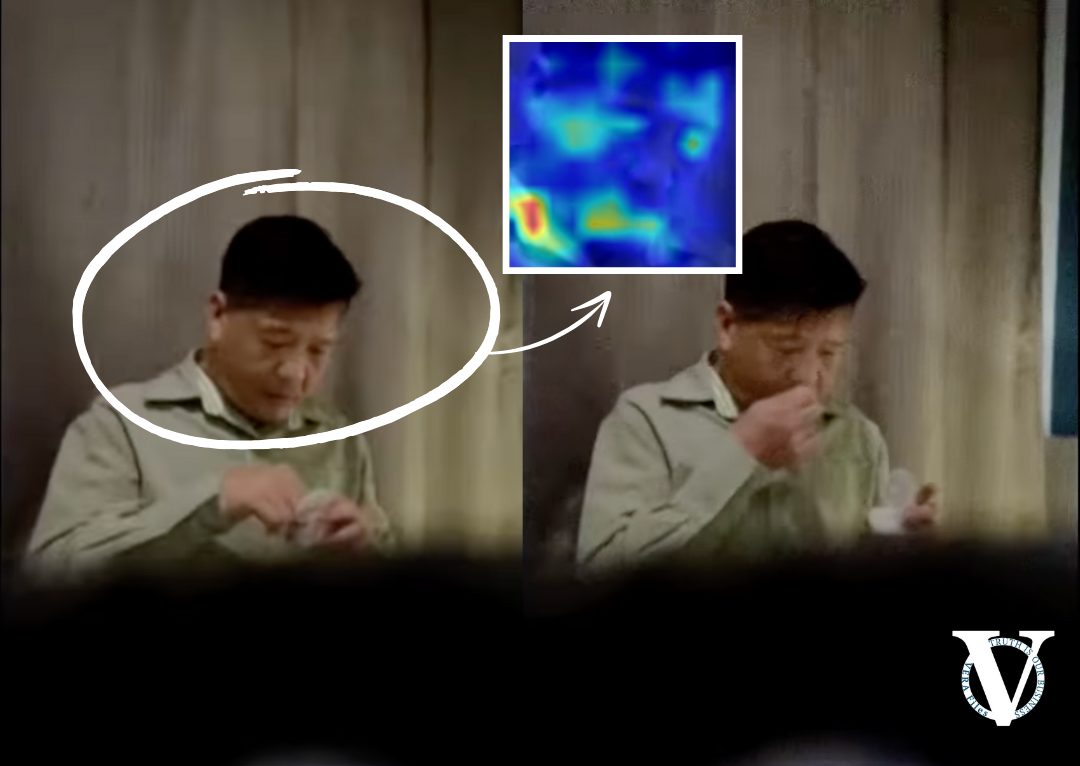

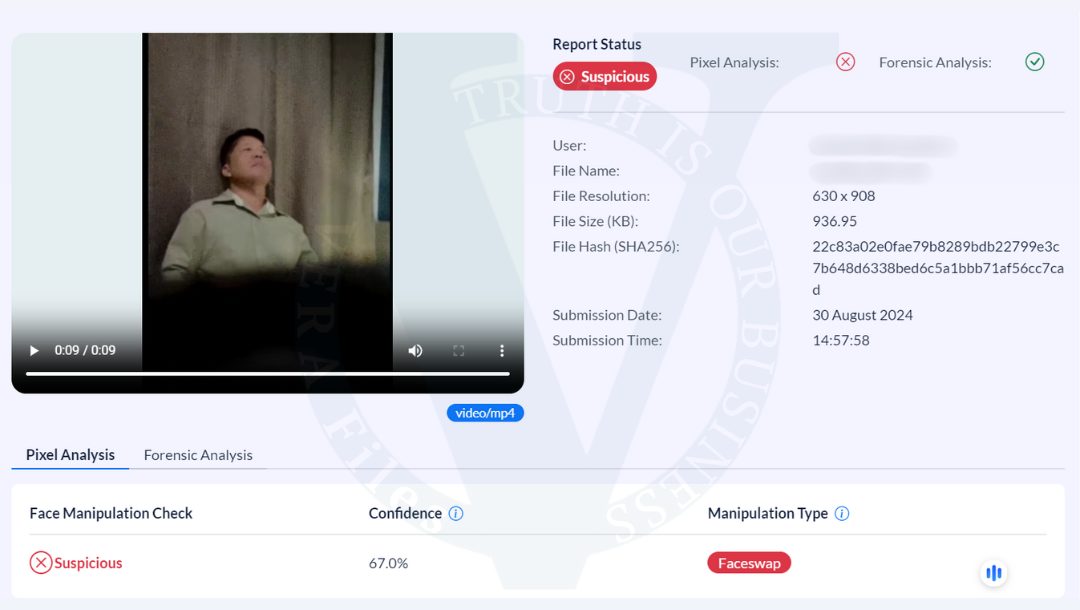

One of the tools the DAU used is called SensityAI, which uses AI to detect video, image or audio deepfakes. Interpreting the results of the tool, the DAU found the video to be suspicious and bearing signs of a type of manipulation called face swap. The DAU told VERA Files Fact Check:

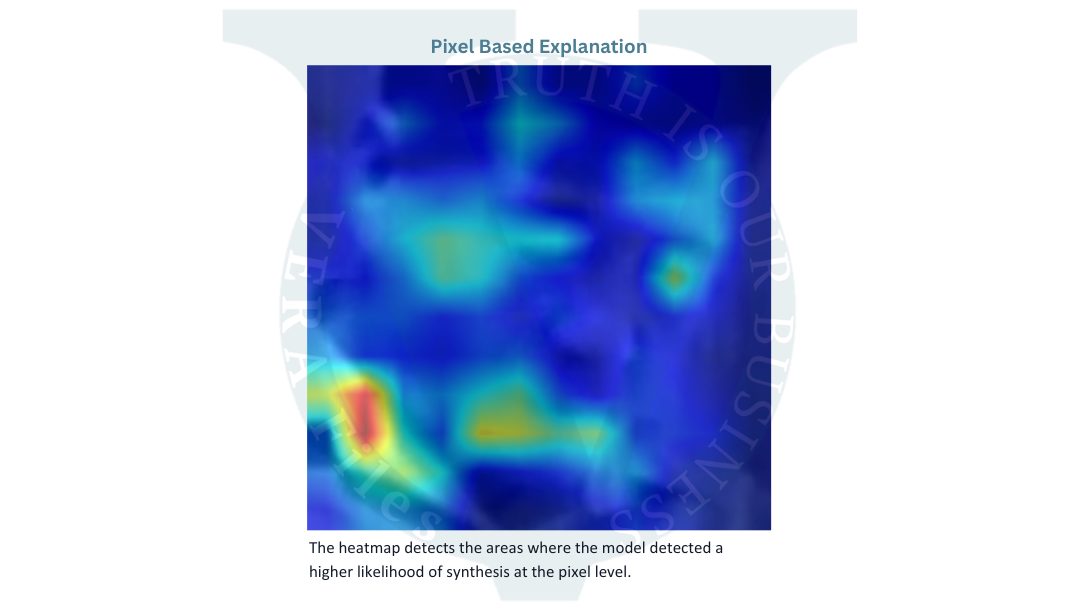

“This image, based on our interpretation, highlights an important visual artefact of faceswaps; that being continuity error. In events of sudden/pronounced movements of the face, or in the event of the face being occluded by something opaque, the face swap visuals get interrupted, revealing bits and pieces of the original face that was superimposed using A.I.

In this image, you can see how the heat signature (most probably depicting real face) is restricted to the lower jaw region, while the rest of the face appears cold (probably referring to the unnatural face that is swapped in). This could refer to the manipulation that is apparent throughout the face, save for the lower jaw region that gives away face swap.”

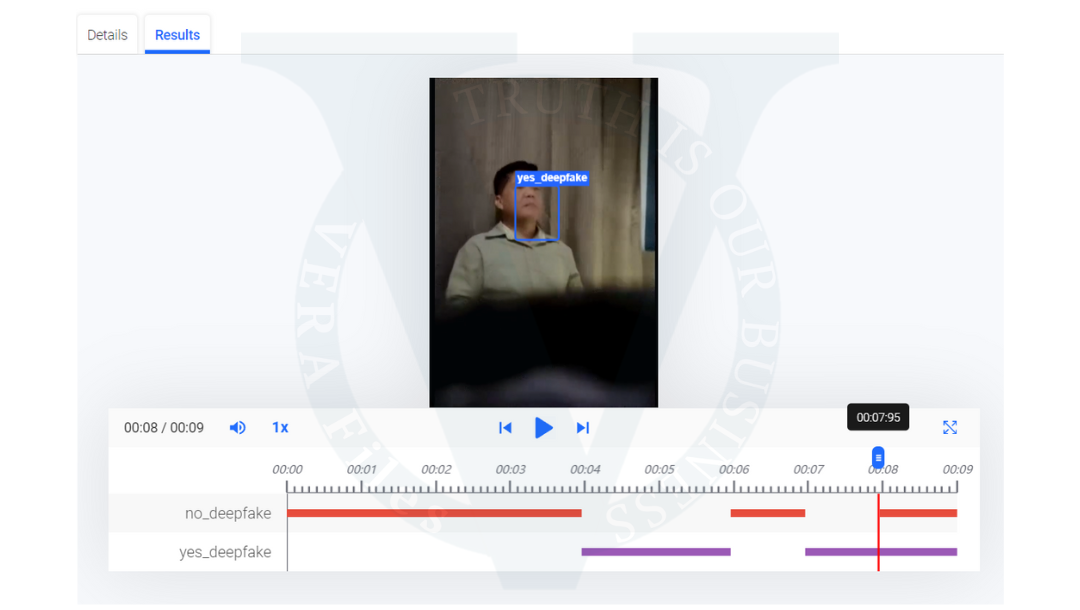

The DAU also analyzed the video using a tool called HIVE, which detects AI-generated text, image, video, and audio. Its interpretation of HIVE’s results indicates the following:

“Traces of manipulation can be found at multiple points of the video’s run-time. But also interesting to note here is the video from the eight-second to nine-second mark where the no-deepfake and yes-deepfake seemingly overlap. This could be due to the tool detecting traces of manipulation, but it being too low to warrant a confident designation of manipulation from the tool itself.”

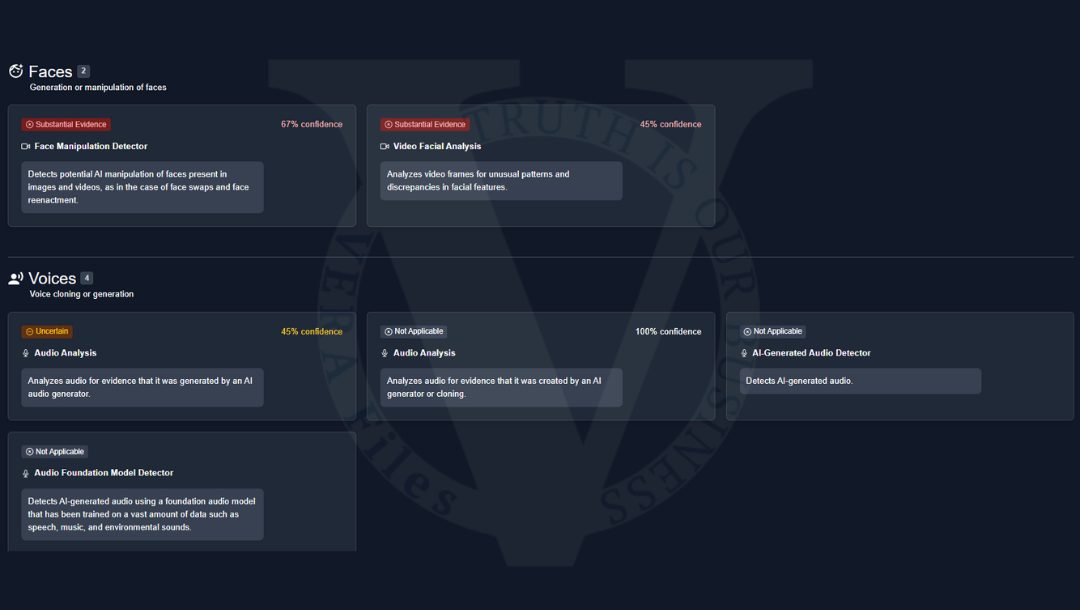

Lastly, the DAU used a third tool called TrueMedia, which identifies political deepfakes in social media using AI. The DAU’s reading of TrueMedia’s results: There were traces of manipulation in Maharlika’s polvoron video.

The tool gave a 67% confidence rating for detecting potential AI manipulation of the face in the video, and a 45% confidence rating for identifying unusual patterns and discrepancies in facial features.

The DAU also shared analysis from their partner GetReal Labs, which was co-founded by University of California-Berkeley professor Hany Farid and his team specializing in digital forensics and AI detection. They had assessed the earlier, poor-quality video but, even then, told us that they could not convincingly say that the man in the video was Marcos.

“[T]he human ear is a fairly decent biometric. Because a face-swap deepfake is only eyebrow to chin and ear to ear, the ears can often be used as indication of a fake. Attached is a two-frame animation (1 screenshot from the video, 1 from President Marcos’s recent state of the nation address) in which you can see some differences in the shape of the ear. The structure of the face and the hairline also seem quite different.”

Our learnings

At the time the polvoron video was released in July, it was difficult to come to a conclusion about its legitimacy.

It was easy to poke holes in its authenticity but, at the same time, analyses using AI-detection tools were inconclusive until a higher-quality copy of the video was made available. From a fact-checking perspective, we couldn’t give a definitive fact-check rating with limited information.

With the midterm elections around the corner, and a rift between the occupants of the highest positions in the land growing wider each day, this will probably not be the last time that we will encounter claims like this. Just this month, another claim surfaced alleging that Marcos was hospitalized and had been in critical condition.

In situations like this, we turn to the basics of fact-checking. We must ask ourselves two primary questions: Who is the source of the information? Is it true?

If neither of the answers to these questions is definitive, we advise the public to take what they see or hear with a grain of salt.